Lyapunov stability is an important notion for dynamical systems that has been largely absent from the optimization literature. When studying optimization methods, the goal has traditionally been to prove convergence. However, convergence to critical points can sometimes be unrealistic or undesirable in practice. In this talk, I will introduce the notion of Lyapunov stability for optimization methods. I will then describe my work on characterizing the local and global stability of first-order methods. My results apply to problems arising in data science. In particular, I provide a guarantee for the SGD optimizer when training neural networks.

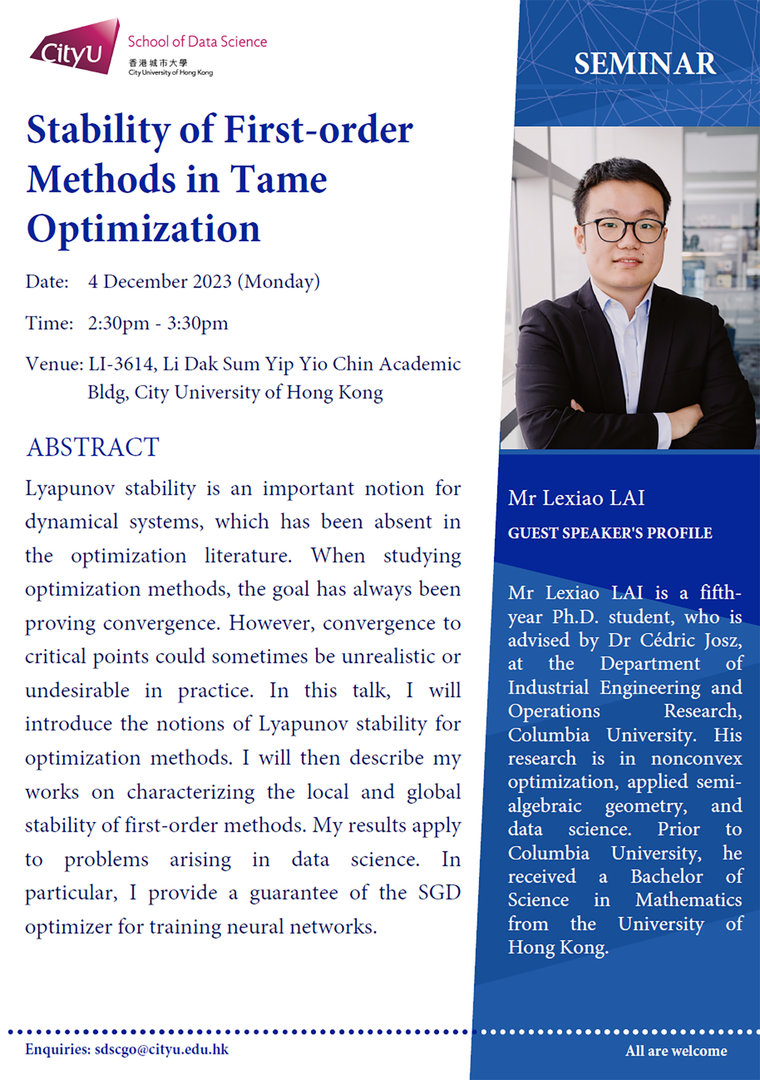

Speaker: Mr Lexiao LAI

Date: 4 December 2023 (Monday)

Time: 02:30pm – 03:30pm

Biography

Mr Lexiao LAI is a fifth-year Ph.D. student in the Department of Industrial Engineering and Operations Research at Columbia University, advised by Dr Cédric Josz. His research is in nonconvex optimization, applied semi-algebraic geometry, and data science. Before joining Columbia University, he received a Bachelor of Science in Mathematics from the University of Hong Kong.