We investigate robust mean estimation using different loss functions, including the Huber loss, the Catoni loss (Catoni, 2012), and two newly defined loss functions designed to gauge performance when important "spread" parameters are over- or underestimated. We only assume $E|x_i|^q < \infty$ for some $1<q\leq 2$. This is a new contribution to the literature, which has so far studied only the case $q=2$, i.e., robust mean estimation assuming finite second-order moments. This development is particularly important when estimating higher-order moments themselves, such as the kurtosis of financial return data: estimating $E x_i^4$ is a mean estimation problem for $x_i^4$, whose second moment is finite only if $E x_i^8 < \infty$. Using our proposed robust mean estimator, we construct a new eigenvalue-regularized covariance matrix estimator under the assumption $p/n \rightarrow c>0$, where $p$ is the dimension and $n$ is the sample size. This estimator requires only finite moments up to order $\xi$, with $2<\xi\leq 4$, a substantial relaxation compared with the finite 12th-order moments required in Lam (2016). Theoretical properties are analysed through a newly defined relative loss function. Extensive empirical studies compare our estimator with contemporary estimators, such as the Huber-type M-estimator and the rank-based estimator analysed in Avella et al. (2018), and demonstrate its superior performance. Finally, a set of ionosphere data is analysed.
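The sketch below makes the first part of the abstract concrete with two classical robust mean estimators it mentions: the Huber-type and the Catoni-type M-estimators, written in pure Python. It is not the speaker's estimator, because the two new loss functions are not specified here. The function names, the bisection solver, and the tuning parameters `tau` and `alpha` are illustrative assumptions.

```python
import math
from typing import Callable, Sequence


def huber_psi(u: float, tau: float) -> float:
    """Derivative of the Huber loss: identity on [-tau, tau], clipped outside."""
    return max(-tau, min(tau, u))


def catoni_psi(u: float) -> float:
    """Catoni's widest influence function: sign(u) * log(1 + |u| + u^2 / 2)."""
    return math.copysign(math.log1p(abs(u) + 0.5 * u * u), u)


def m_estimate_mean(
    xs: Sequence[float],
    psi: Callable[[float], float],
    tol: float = 1e-10,
    max_iter: int = 200,
) -> float:
    """Solve sum_i psi(x_i - mu) = 0 for mu by bisection.

    psi is non-decreasing, so the score is non-increasing in mu. It is >= 0
    at mu = min(xs) and <= 0 at mu = max(xs), which brackets a root.
    """
    lo, hi = min(xs), max(xs)
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        score = sum(psi(x - mid) for x in xs)
        if score > 0:
            lo = mid  # score still positive: the root lies to the right
        else:
            hi = mid  # score <= 0: the root lies at or to the left of mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


def huber_mean(xs: Sequence[float], tau: float) -> float:
    # Hypothetical helper: Huber M-estimator of location with threshold tau.
    return m_estimate_mean(xs, lambda u: huber_psi(u, tau))


def catoni_mean(xs: Sequence[float], alpha: float) -> float:
    # Hypothetical helper: Catoni's estimator solves sum_i psi(alpha * (x_i - mu)) = 0.
    return m_estimate_mean(xs, lambda u: catoni_psi(alpha * u))
```

For example, on the data `[1, 2, 3, 4, 100]` the sample mean is 22, while `huber_mean(data, tau=2.0)` returns approximately 3.0, because the outlier's contribution to the score is capped at `tau`. With a very large `tau` the Huber estimator reduces to the sample mean. How `tau` and `alpha` should scale with the sample size and the moment order $q$ is a key part of the theory, and the abstract does not state the choices used in the talk.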

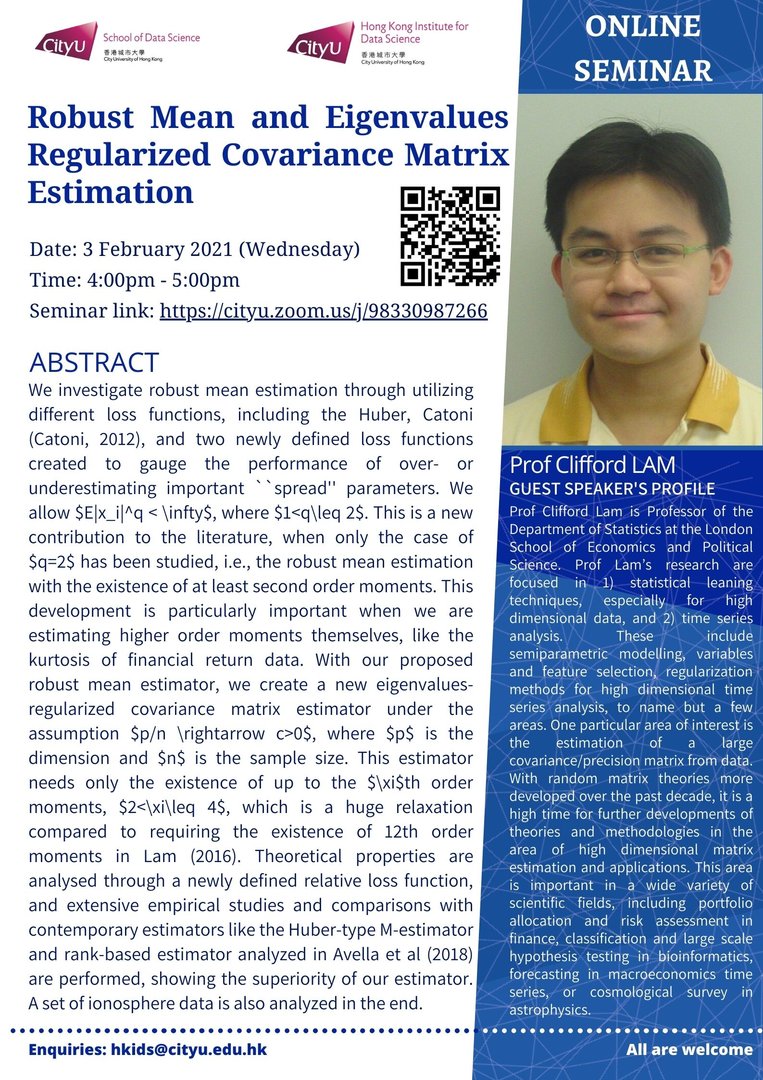

Speaker: Professor Clifford LAM

Date: 3 February 2021 (Wed)

Time: 4:00pm – 5:00pm

Biography

Prof Clifford Lam is Professor in the Department of Statistics at the London School of Economics and Political Science. Prof Lam’s research focuses on 1) statistical learning techniques, especially for high-dimensional data, and 2) time series analysis. These include semiparametric modelling, variable and feature selection, and regularization methods for high-dimensional time series analysis, to name but a few areas. One particular area of interest is the estimation of a large covariance/precision matrix from data. With random matrix theory having developed considerably over the past decade, it is high time for further development of theory and methodology in high-dimensional matrix estimation and its applications. This area is important in a wide variety of scientific fields, including portfolio allocation and risk assessment in finance, classification and large-scale hypothesis testing in bioinformatics, macroeconomic time series forecasting, and cosmological surveys in astrophysics.