In this talk, we study a stochastic nonconvex optimization problem that arises in supply chain and revenue management. Leveraging an implicit convex reformulation (i.e., hidden convexity) obtained via a change of variables, we develop stochastic gradient-based algorithms and establish their sample and gradient complexities for achieving an ε-global optimal solution. Interestingly, our proposed Mirror Stochastic Gradient (MSG) method operates only in the original space, using gradient estimators of the original nonconvex objective, and achieves sample complexities that match the lower bounds for solving stochastic convex optimization problems. In the air-cargo network revenue management (NRM) problem, extensive numerical experiments demonstrate the superior performance of our proposed MSG algorithm for booking limit control: it attains higher revenue at lower computational cost than state-of-the-art bid-price-based control policies, especially when the variance of random capacity is large.
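To make "hidden convexity" concrete, here is a minimal toy sketch. It is not the MSG method from the talk: it runs plain stochastic gradient descent, and the variable change `c(x) = x + x**3/3`, the Gaussian demand model, and the step-size schedule are all hypothetical choices made for illustration. The objective F(x) = E[(c(x) - ξ)^2] is nonconvex in x but convex in u = c(x). Because c'(x) ≥ 1, the derivative F'(x) = 2(c(x) - μ)c'(x) vanishes only where c(x) = μ, so every stationary point found in the original space is the global minimizer.

```python
import random

def c(x):
    """Hypothetical change of variables u = c(x); c'(x) = 1 + x**2 >= 1, so c is invertible."""
    return x + x**3 / 3.0

def c_prime(x):
    return 1.0 + x**2

def expected_loss(x, mu, sigma):
    """F(x) = E[(c(x) - xi)^2] for xi ~ N(mu, sigma^2), i.e. (c(x) - mu)^2 + sigma^2.
    Convex in u = c(x), but not convex in x."""
    return (c(x) - mu) ** 2 + sigma**2

def sgd_original_space(mu, sigma, x0=0.0, steps=5000, eta0=0.02, seed=0):
    """Plain SGD on F using only stochastic gradients in the original x-space."""
    rng = random.Random(seed)
    x = x0
    for t in range(steps):
        xi = rng.gauss(mu, sigma)                # one sample of the random parameter
        grad = 2.0 * (c(x) - xi) * c_prime(x)    # unbiased estimate of F'(x)
        x -= eta0 / (1.0 + t / 100.0) * grad     # diminishing step size
    return x

def invert_c(u, lo=-10.0, hi=10.0, iters=100):
    """Bisection for c(x) = u; valid because c is strictly increasing."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if c(mid) < u:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

mu, sigma = 4.0, 0.5
x_hat = sgd_original_space(mu, sigma)
x_star = invert_c(mu)  # global minimizer: u* = mu in the hidden convex space
print(f"SGD estimate: {x_hat:.3f}, global minimizer: {x_star:.3f}")
```

With these toy parameters, F has negative curvature near x = 0.5, yet the iterates still settle at the global minimizer. The talk's contribution concerns how fast (in samples and gradients) such problems can be solved, which this sketch does not attempt to reproduce.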

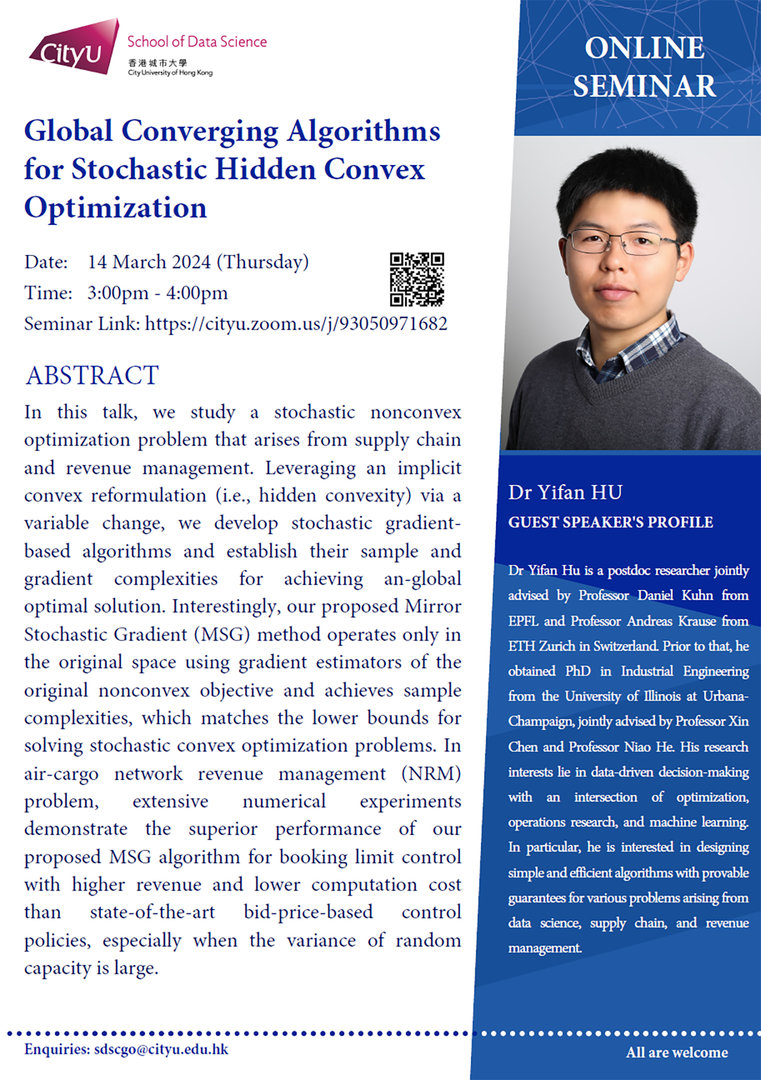

Speaker: Dr Yifan HU

Date: 14 March 2024 (Thursday)

Time: 3:00pm – 4:00pm

Biography

Dr Yifan Hu is a postdoctoral researcher jointly advised by Professor Daniel Kuhn at EPFL and Professor Andreas Krause at ETH Zurich in Switzerland. Prior to that, he obtained his PhD in Industrial Engineering from the University of Illinois at Urbana-Champaign, where he was jointly advised by Professor Xin Chen and Professor Niao He. His research interests lie in data-driven decision-making at the intersection of optimization, operations research, and machine learning. In particular, he is interested in designing simple and efficient algorithms with provable guarantees for problems arising in data science, supply chain, and revenue management.