In this talk, we present some recent results on the approximation theory of deep learning architectures for sequence modelling. In particular, we formulate a basic mathematical framework under which popular architectures such as recurrent neural networks, dilated convolutional networks (e.g. WaveNet) and encoder-decoder structures can be rigorously compared. These analyses reveal some interesting connections between approximation, memory, sparsity and low-rank phenomena that may guide the practical selection and design of these network architectures.

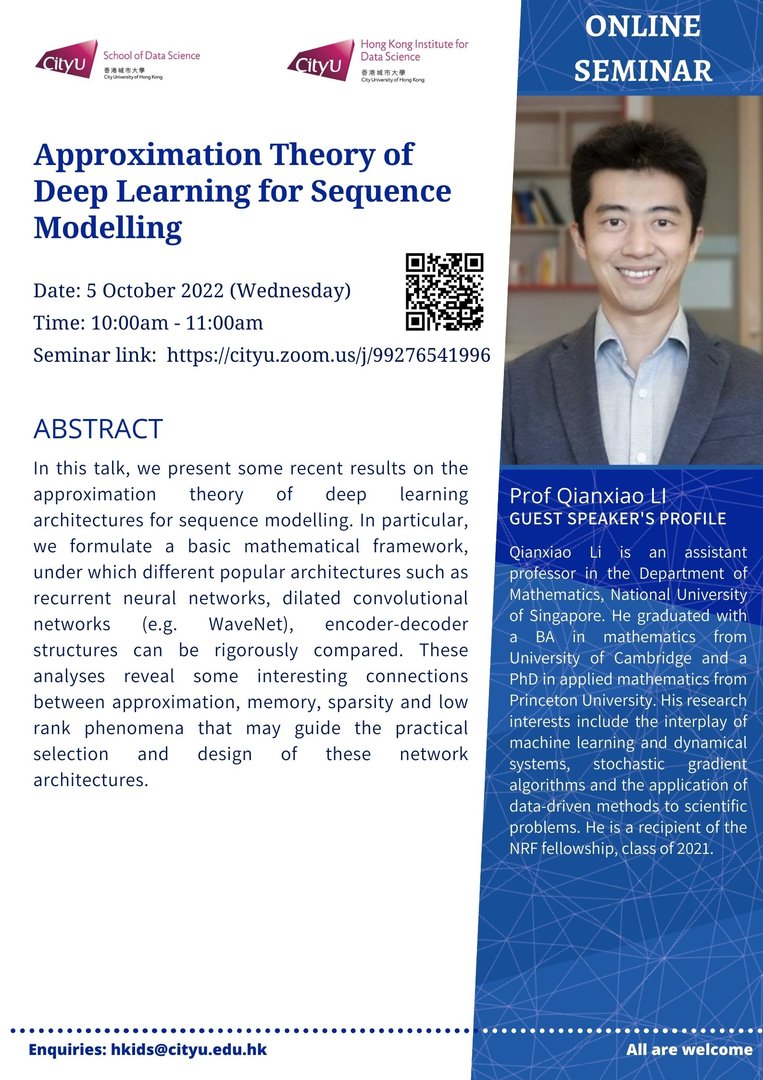

Speaker: Prof Qianxiao LI

Date: 5 October 2022 (Wed)

Time: 10:00am – 11:00am

Biography

Qianxiao Li is an assistant professor in the Department of Mathematics, National University of Singapore. He graduated with a BA in mathematics from the University of Cambridge and a PhD in applied mathematics from Princeton University. His research interests include the interplay of machine learning and dynamical systems, stochastic gradient algorithms, and the application of data-driven methods to scientific problems. He is a recipient of the NRF fellowship, class of 2021.